Will Your Uploaded Mind Still Be You? —Michael Graziano

On August 18, 2019, I posted “On Being a Copy of Someone's Mind.” I was intrigued to see, from the teaser for Michael Graziano’s new book Rethinking Consciousness: A Scientific Theory of Subjective Experience (published as an op-ed in the Wall Street Journal on September 13, 2019), that Michael has been thinking along similar lines.

Michael explains the process of copying someone’s mind (obviously not doable by human technology yet!) this way:

To upload a person’s mind, at least two technical challenges would need to be solved. First, we would need to build an artificial brain made of simulated neurons. Second, we would need to scan a person’s actual, biological brain and measure exactly how its neurons are connected to each other, to be able to copy that pattern in the artificial brain. Nobody knows if those two steps would really re-create a person’s mind or if other, subtler aspects of the biology of the brain must be copied as well, but it is a good starting place.

Michael nicely describes the experience of the copy (which, following Robin Hanson, I called an “em,” short for “brain emulation,” in “On Being a Copy of Someone's Mind”):

Suppose I decide to have my brain scanned and my mind uploaded. Obviously, nobody knows what the process will really entail, but here’s one scenario: A conscious mind wakes up. It has my personality, memories, wisdom and emotions. It thinks it’s me. It can continue to learn and remember, because adaptability is the essence of an artificial neural network. Its synaptic connections continue to change with experience.

Sim-me (that is, simulated me) looks around and finds himself in a simulated, videogame environment. If that world is rendered well, it will look pretty much like the real world, and his virtual body will look like a real body. Maybe sim-me is assigned an apartment in a simulated version of Manhattan, where he lives with a whole population of other uploaded people in digital bodies. Sim-me can enjoy a stroll through the digitally rendered city on a beautiful day with always perfect weather. Smell, taste and touch might be muted because of the overwhelming bandwidth required to handle that type of information. By and large, however, sim-me can think to himself, “Ah, that upload was worth the money. I’ve reached the digital afterlife, and it’s a safe and pleasant place to live. May the computing cloud last indefinitely!”

Then Michael goes on to meditate on the fact that there could then be two of you, and on whether that makes the copy not-you. Here is what I said on that in “On Being a Copy of Someone's Mind”:

On the assumption that experience comes from particles and fields known to physics (or of the same sort as those known to physics now), and that the emulation is truly faithful, there is nothing hidden. An em that is a copy of you will feel that it is a you. Of course, if you consented to the copying process, an em that is a copy of you will have that memory, which is likely to make it aware that there is now more than one of you. But that does NOT make it not-you.

You might object that the lack of physical continuity makes the em copy of you not-you. But our sense of physical continuity with our past selves is largely an illusion. There is substantial turnover in the particular particles in us. Similarity of memory—memory now being a superset of memory earlier, minus some forgetting—is the main thing that makes me think I am the same person as a particular human being earlier in time.

…

… after the copying event these two lines of conscious experience are isolated from one another as any two human beings are mentally isolated from one another. But these two consciousnesses that don’t have the same experience after the split are both me, with a full experience of continuity of consciousness from the past me. If one of these consciousnesses ends permanently, then one me ends but the other me continues. It is possible to both die and not die.

The fact that there can be many lines of subjectively continuous consciousness that are all me may seem strange, but it may be happening all the time anyway given the implication of quantum equations taken at face value that all kinds of quantum possibilities all happen. (This is the “Many-Worlds Interpretation of Quantum Mechanics.”)

In other words, it may seem strange that there could be two of you, but that doesn’t make either of them not-you.

Michael points out that the digital world the copy lives in (if the copy is not embedded in a physical robot) will interact with the physical world we live in:

He may live in the cloud, with a simulated instead of a physical body, but his leverage on the real world would be as good as anyone else’s. We already live in a world where almost everything we do flows through cyberspace. We keep up with friends and family through text and Twitter, Facebook and Skype. We keep informed about the world through social media and internet news. Even our jobs, some of them at least, increasingly exist in an electronic space. As a university professor, for example, everything I do, including teaching lecture courses, writing articles and mentoring young scientists, could be done remotely, without my physical presence in a room.

The same could be said of many other jobs—librarian, CEO, novelist, artist, architect, member of Congress, President. So a digital afterlife, it seems to me, wouldn’t become a separate place, utopian or otherwise. Instead, it would become merely another sector of the world, inhabited by an ever-growing population of citizens just as professionally, socially and economically connected to social media as anyone else.

He goes on to think about whether ems, derived from copying minds, would have a power advantage over flesh-and-blood humans, and to wonder whether a digital afterlife would have the same kind of motivational consequences as telling people about heaven and hell. What he misses is Robin Hanson’s incisive economic analysis in The Age of Em: because of the low cost of copying and running ems, there could easily be trillions of ems and only billions of flesh-and-blood humans once copying of minds gets going. There could be many copies derived from a single flesh-and-blood human, each having, after the copying event, experiences different from those of the flesh-and-blood original. (There would be the most copies of the most productive people.) I think Robin’s analysis is right. That means that ems would be by far the most common type of human being. Fortunately, they are likely to have a lot of affection for the flesh-and-blood human beings they were copied from and for other flesh-and-blood human beings they remember from their past as flesh-and-blood human beings. (However, some ems might be copied from infants and spend almost all of their remembered life in cyberspace.)

Michael also writes about how brain emulation could make interstellar travel possible. He talks about many ems keeping each other company on an interstellar journey, but the equivalent of suspended animation is a breeze for ems, so there is no need for ems to be awake during the journey at all. Having many backups of each type of em can make the journey safer, as well. The other thing that makes interstellar travel easier is that, upon arrival in another solar system or other faraway destination, when physical action is necessary, the robots that some of the ems are embedded in to carry out that action can be quite small.

But while interstellar travel becomes much easier with ems, Robin Hanson argues that the bulk of em history would take place before ems had a chance to get to other solar systems: it is likely to be easy and economically advantageous to speed up many ems to a thousand or even a million times the speed of flesh-and-blood human beings. At those speeds, the time required for interstellar travel would seem much longer. Ems would want to go to the stars for the adventure and for the honor of being founders and progenitors in those new environments, but in terms of subjective time, a huge amount of time would have passed for the ems back home on Earth before any report from the interstellar travelers could ever be received.
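To put rough numbers on the point about subjective time, here is a minimal Python sketch. The destination (about 4.37 light-years, roughly the distance to Alpha Centauri), the ship speed of 10% of light speed, and the speedup factors are illustrative assumptions of mine, not figures from Robin or Michael, and the calculation ignores acceleration and relativistic effects.

```python
def subjective_years(objective_years: float, speedup: float) -> float:
    """Subjective time for an em running `speedup` times faster than a flesh-and-blood human."""
    return objective_years * speedup


def years_until_first_report(distance_ly: float, ship_speed_c: float) -> float:
    """Objective years from launch until the first report reaches home: the outbound
    trip plus the light-speed return of the signal. Ignores acceleration and
    relativistic effects (simplifying assumption)."""
    return distance_ly / ship_speed_c + distance_ly


if __name__ == "__main__":
    # Hypothetical trip: about 4.37 light-years at 10% of light speed.
    wait = years_until_first_report(4.37, 0.1)
    for speedup in (1, 1_000, 1_000_000):
        print(f"speedup {speedup:>9,}: about {subjective_years(wait, speedup):,.0f} subjective years")
```

Even at a thousand-fold speedup, the ems back home would wait tens of thousands of subjective years for the first report from such a trip.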

Robin Hanson argues that, while many things would be strange to us in The Age of Em, most ems would think “It’s good to be an em.” (Of course, their word for it would likely be different from “em.”) I agree. I think few ems would want to be physical human beings living in our age. Just as, from our perspective now (if enlightened by a reasonably accurate knowledge of history), the olden days were the bad old days, from the ems’ perspective our era will be the bad old days. I, for one, would love to experience The Age of Em as an em. It opens up so many possibilities for life!

I know that an accurate copy of my mind would feel it was me, and I, the flesh-and-blood Miles, consider any such being that comes to exist to be me. In Robin’s economic analysis, the ease of copying ems leads to stiff competition among workers, so even if a copy of me were there in The Age of Em, I wouldn’t expect there to be many copies of me. I very much doubt that I would be among the select few who had billions of copies, or even a thousand copies. But I figure that at worst, a handful of copies could make a living as guinea pigs for social science research, where the diversity of backgrounds that human subjects come from makes things interesting. And if the interest rates in The Age of Em are as high as Robin thinks they would be, then by strenuous saving, the ems that are me might be able to save up enough money after a time of working to not have to work anymore.
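How quickly saving lets an em stop working depends heavily on the interest rate. The post gives no specific numbers, so here is a minimal sketch with hypothetical ones: an em saves half its wage each period and can stop working once the interest on its savings alone covers the consumption it had while working. The function name and all parameter values are illustrative assumptions.

```python
from typing import Optional


def periods_until_financially_independent(wage: float, saving_rate: float,
                                          interest_rate: float,
                                          max_periods: int = 10_000) -> Optional[int]:
    """Number of working periods before interest on savings covers the same
    consumption the em had while working. Returns None if never reached."""
    consumption = (1 - saving_rate) * wage
    wealth = 0.0
    for period in range(1, max_periods + 1):
        # Last period's wealth earns interest, then this period's saving is added.
        wealth = wealth * (1 + interest_rate) + saving_rate * wage
        if interest_rate * wealth >= consumption:
            return period
    return None


if __name__ == "__main__":
    for r in (0.02, 0.10):
        n = periods_until_financially_independent(wage=1.0, saving_rate=0.5, interest_rate=r)
        print(f"interest rate {r:.0%}: {n} periods of saving half the wage")
```

With these hypothetical numbers, saving half of one’s wage takes 36 periods at a 2% interest rate but only 8 periods at 10%, which is why high interest rates matter so much for this plan.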

Measuring Learning Outcomes from Getting an Economics Degree

This post both gives a rundown of what I view as key concepts in economics for undergraduates and discusses how to measure whether students have learned them.

I am proud of my Economics Department here at the University of Colorado Boulder. We have been talking about measuring learning outcomes quite seriously. We have three measurement tools in mind:

A department-wide quiz that will be something like 15 multiple-choice questions administered in every economics class. Although we are still deciding exactly what it will look like, here are some possible details we have talked about so far:

We need to do it in classes for logistical reasons: we have no other proctoring machinery at that scale.

We want it to be a low-stakes test because we can better measure what the students have in long-term memory if we give no incentive to study for it. Therefore, there will be no consequences for getting the wrong answer.

We are deferring any differentiation of the department-wide quiz by which class a student is taking. Initially at least, it will be the same in all economics classes and a mandated part of all economics classes. (One exception: we have not yet thought hard about whether the department-wide quiz should be given in the Principles classes. The argument for giving it there is that it would be a learning experience for the students and would provide some baseline data. But our primary data-collection interest is in learning about our majors.)

We haven’t discussed this, but the door is open to having some penalty for not taking a certain number of these quizzes before graduation; that is basically a penalty for low attendance in class. The penalty could simply be having to sit for a special administration of all the quizzes that were given during the student’s years in the major. This make-up quiz would provide valuable evidence about selection in who attends class.

We are OK with students taking the same quiz more than once because taking a test is, itself, a learning experience. See “The Most Effective Memory Methods are Difficult—and That's Why They Work.”

The questions will change each semester to rotate through different concepts, so that by the time a student is finished with an Economics degree, we will know how they fared on a reasonably wide range of concepts. Of course, how their knowledge deepens from year to year is also of interest, so some questions are likely to be asked in a higher fraction of semesters.

An exit survey will ask students about their experience and measure things that a quiz can’t.

In addition to asking about students’ experience with their classes, we can ask what job they will be taking next.

We also talked about the possibility of having at least one essay question on the substance of economics on this survey. (We would then pay some of our graduate students to grade it.) As we are thinking of things now, this, too, would be no-stakes. Nothing would happen to the student if they had a bad essay.

In order to get a good response rate, we hope to make completing the exit survey a requirement for graduation.

Instructors will report students’ involvement in activities that involve integrative skills. We hope to get data at the student level, for example on the following activities, each subdivided into “individually” and “in a group”:

writing

giving presentations

analyzing data

interpreting data analysis done by others
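The plan to rotate quiz questions across semesters, while asking some concepts in a higher fraction of semesters, can be sketched as a simple round-robin schedule. This is only an illustration under assumptions of my own: the function name, the split into “core” concepts asked every semester and “rotating” concepts, and the concept names are all hypothetical; the 15-question length comes from the tentative plan for the department-wide quiz.

```python
from itertools import cycle, islice
from typing import List, Sequence


def quiz_schedule(core: Sequence[str], rotating: Sequence[str],
                  semesters: int, questions_per_quiz: int = 15) -> List[List[str]]:
    """One quiz per semester: every core concept each time, with the remaining
    slots filled round-robin from the rotating concepts."""
    slots = questions_per_quiz - len(core)
    if slots < 0:
        raise ValueError("more core concepts than questions on the quiz")
    if slots > 0 and not rotating:
        raise ValueError("no rotating concepts to fill the remaining slots")
    # A single shared iterator, so each semester picks up where the last one stopped.
    pool = cycle(rotating)
    return [list(core) + list(islice(pool, slots)) for _ in range(semesters)]


if __name__ == "__main__":
    # Hypothetical concept names, for illustration only.
    core = ["thinking at the margin", "negative interest rates", "capital requirements"]
    rotating = [f"rotating concept {i}" for i in range(1, 25)]
    for semester, quiz in enumerate(quiz_schedule(core, rotating, semesters=4), start=1):
        print(f"semester {semester}: first rotating questions {quiz[3:6]}")
```

Because the rotation continues from one semester to the next, 3 core concepts plus 24 rotating ones means every rotating concept appears once every two semesters, while the core concepts show up every time.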

For the quiz, since I am the only macroeconomist on the committee, I have been thinking about macroeconomic concepts I would want students to know, as well as microeconomic concepts that are especially important to macro where there is some variance in what students are taught. A department-wide quiz meant to measure students’ long-run learning has two somewhat distinct purposes:

to see if students are learning what we are trying to teach them.

to nudge faculty to try to teach students important concepts that may be neglected or distorted.

If there is reason for concern that a concept might be getting neglected in the overall curriculum, it doesn’t need to be quite as important a concept to warrant inclusion in the quiz. If it is a concept to which we know a lot of teaching effort is being devoted, then it has to be a very important concept to be included.

Here are some of the concepts on my wishlist for testing students’ knowledge in the department-wide quizzes. First, here are some concepts I worry may be getting neglected or distorted:

Positive interest rates are when, overall, the borrower is paying the lender for the use of funds. Negative interest rates are when, overall, the lender is paying the borrower for taking care of funds.

Central banks like the Fed change interest rates not only by changing the money supply, but also by directly changing the interest rates they pay and the interest rates at which they lend.

Ordinarily, the main job of central banks like the Fed is to keep the economy at the level of output and employment that leaves inflation steady.

When central banks cut interest rates, it stimulates the economy (a) by shifting the balance of power, in terms of what they can afford, toward those who want to borrow and spend relative to those who want to save instead of spend and (b) by giving everyone, both borrowers and lenders, an extra incentive to spend if they can afford to. Raising interest rates does the reverse.

Measures other than interest rate changes that affect consumption, investment or government purchases can substitute for interest rate changes in stimulating or reining in spending. These may be important instruments of policy if either (a) they act faster than interest rate changes or (b) interest rates are a matter of concern for reasons beyond their effect on overall spending.

A key determinant (many economists argue the key determinant) of the balance of trade is the decisions of the domestic government, firms and households about whether and how much to buy of foreign assets (assets denominated in another currency) and the decisions of foreign governments, firms and households about whether and how much to buy of domestic assets.

“Capital requirements” require banks to be getting a certain minimum fraction of their funding from stockholders as opposed to from borrowing. Thus, they could also be called “equity requirements.” Many of the economists concerned about financial stability argue that high levels of bank borrowing that led to low fractions of stockholder equity contributed to the Financial Crisis in 2008 and that higher capital (equity) requirements are an important measure to reduce the chances of another serious financial crisis.

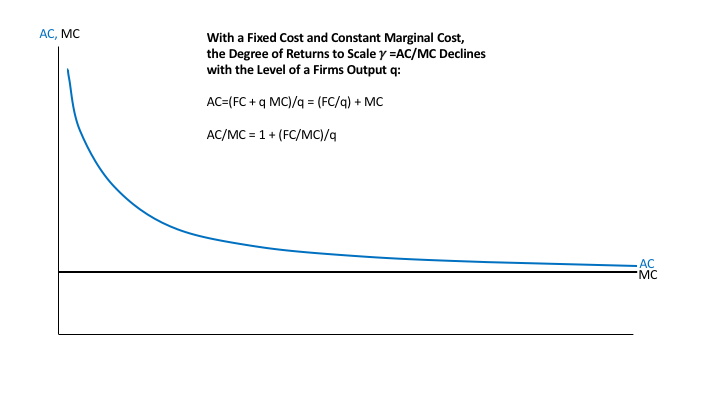

The replication argument highlights the fact that any claim of decreasing returns to scale can be seen as one of three things: (a) a factor of production that is being held fixed, or is not scaling up along with everything else, (b) the price of a factor of production going up as production is expanded or (c) something like an integer constraint. See “There Is No Such Thing as Decreasing Returns to Scale.”
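A small numeric sketch may help with capital requirements. It assumes a simplified bank whose funding is either borrowing or stockholder equity, and it ignores the risk weighting that actual capital rules apply to assets; the function names and numbers are hypothetical. It shows why thin equity is fragile: losses fall on stockholders first, so a bank funded 97% by borrowing is wiped out by a 4% fall in the value of its assets, while one funded 85% by borrowing is not.

```python
def equity_ratio(assets: float, debt: float) -> float:
    """Fraction of the bank's funding that comes from stockholders (equity / assets)."""
    return (assets - debt) / assets


def meets_requirement(assets: float, debt: float, minimum_equity_ratio: float) -> bool:
    """Simplified capital (equity) requirement check, with no risk weighting."""
    return equity_ratio(assets, debt) >= minimum_equity_ratio


def equity_after_loss(assets: float, debt: float, loss_fraction: float) -> float:
    """Stockholders absorb losses first; the debt is still owed in full."""
    return assets * (1 - loss_fraction) - debt


if __name__ == "__main__":
    # Two hypothetical banks, each with 100 in assets, hit by the same 4% fall in asset values.
    for name, debt in (("thin equity", 97.0), ("thick equity", 85.0)):
        print(name, f"equity ratio {equity_ratio(100.0, debt):.0%},",
              f"equity after a 4% loss: {equity_after_loss(100.0, debt, 0.04):.1f}")
```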

I have good reason to worry about the last one, number 8, the replication argument. Both in formal classroom visits and informally as I glance into classrooms with open doors, I know enough about what is being taught in the microeconomics classes to think that (in large measure because of the nature of most micro textbooks) they may talk about decreasing returns to scale without being clear about the replication argument. I won’t belabor this here because I have made my case in “There Is No Such Thing as Decreasing Returns to Scale.” But it is something I feel strongly about.
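The replication argument can be illustrated with a production function. As an assumption for illustration only, take a Cobb-Douglas function in capital, labor and land whose exponents (0.3, 0.5 and 0.2, chosen arbitrarily) sum to one, so that replicating everything, in effect building an identical second plant, doubles output. Holding land fixed while doubling everything else then produces what looks like decreasing returns to scale, which is case (a) of the replication argument: a factor that is not scaling up along with everything else.

```python
def output(capital: float, labor: float, land: float,
           a: float = 0.3, b: float = 0.5, c: float = 0.2) -> float:
    """Cobb-Douglas production with exponents summing to 1 (constant returns to scale).
    The functional form and exponents are illustrative assumptions."""
    return capital ** a * labor ** b * land ** c


if __name__ == "__main__":
    base = output(1.0, 1.0, 1.0)
    replicated = output(2.0, 2.0, 2.0)  # replicate the whole operation: double every input
    land_fixed = output(2.0, 2.0, 1.0)  # double everything except land
    print(f"double all inputs:   output x {replicated / base:.3f}")
    print(f"double all but land: output x {land_fixed / base:.3f}")
```

Doubling all three inputs doubles output, while doubling everything except land raises output only by a factor of 2^0.8, about 1.74. The shortfall comes entirely from the fixed factor, not from anything intrinsic to scale.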

As for important concepts that I have reason to think we put a lot of effort into teaching, but where we need to see whether our efforts are working, let me go with the ten concepts Greg Mankiw lays out in the first chapter of his Principles of Economics textbook. Each item begins with Greg’s words; my commentary follows.

People face tradeoffs. This is truly fundamental to economics. I can’t tell you how many times it has helped me think through an issue for a blog post to say “The pluses of this policy are …. The minuses are ….” Besides helping students understand economics, the principle that there are pluses and minuses to almost everything will help them be fair-minded. It means one should listen respectfully to others since, even if you ultimately decide they are wrong on a decision overall, they may help identify a minus to the decision you wanted to recommend, which may help you identify what you think is a better choice than your initial idea. (That better choice still may not be the choice they want.)

The cost of something is what you give up to get it. I taught this as thinking clearly of two different, mutually exclusive choices and laying out every aspect in which the two situations are different. The cost of one choice is not being able to make other mutually exclusive choices. Part of the art of economics is identifying which other choices should be compared to any given choice.

Rational people think at the margin. I am not altogether happy with Greg’s use of the word “rational” here. The trouble with the word “rational” is that it has too many meanings. It is fine in context. But in a very broad-ranging discussion, “rational” needs to be replaced by “obeys this axiom.” In economics, there are at least as many meanings to the word “rational” as there are attractive axioms for decision-making. For the students, I think it would be much better to say: “For any choice, often some of the most important alternative choices to compare it to are choices that are just a little different. This is called ‘thinking at the margin.’ Thinking at the margin allows us to use the power of calculus, though the basic ideas can be shown graphically, without calculus.”

People respond to incentives. In practical policy discussions, this principle is a great part of the value-added from having an economist in the room. A big share of the practical value of this principle is in identifying side-effects of policies. Economists are good at pointing out the (often unintended) incentives of a policy and the side effects those incentives will create. Intended incentives of policies are also important, but economists love thinking about incentives so much, they may overestimate the size of the effects of those intended incentives in the interval before solid evidence about the effect sizes for those incentives is available.

Trade can make everyone better off. Here, the economic concept is every bit as much about transactions within a country (trade between individuals) as about transactions between countries. Logically, those are very similar. The good side of trade definitely needs to be taught. But pecuniary externalities are real. A and B trading can make it so I get a worse deal in trading with A. This doesn’t take away the principle that trade is vital for one’s welfare. Barring a divided self, I always want to be able to freely trade myself. But to get a better deal in my own trading, I might want to interfere with other people trading. An interesting example of this insight is the minimum wage. It doesn’t serve any purpose for me to be subject to the minimum wage; I can already reject job offers whose wage is lower than I am willing to accept. But it might benefit me personally for other people to be subject to the minimum wage so that they don’t compete with me and bid down the wage I can get.

Markets are usually a good way to organize economic activity. Here is my take on that, excerpted from my column “America's Big Monetary Policy Mistake: How Negative Interest Rates Could Have Stopped the Great Recession in Its Tracks.”

John von Neumann, who revolutionized economics by inventing game theory (before going on to help design the first atom bomb and lay out the fundamental architecture for nearly all modern computers), left an unfinished book when he died in 1957: The Computer and the Brain. In the years since, von Neumann’s analogy of the brain to a computer has become commonplace. The first modern economist, Adam Smith, was unable to make a similarly apt comparison between a market economy and a computer in his books The Theory of Moral Sentiments and The Wealth of Nations, because they were published, respectively, in 1759 and 1776, more than 40 years before Charles Babbage designed his early computer in 1822. Instead, Smith wrote in The Wealth of Nations:

“Every individual … neither intends to promote the public interest, nor knows how much he is promoting it … he intends only his own security; and by directing that industry in such a manner as its produce may be of the greatest value, he intends only his own gain, and he is in this, as in many other cases, led by an invisible hand to promote an end which was no part of his intention.”

Now, writing in the 21st century, I can make the analogy between a market economy and a computer that Adam Smith could not. Instead of transistors modifying electronic signals, a market economy has individuals making countless decisions of when and how much to buy, and what jobs to take, and companies making countless decisions of what to buy and what to sell on what terms. And in place of a computer’s electronic signals, a market economy has price signals. Prices, in a market economy, are what bring everything into balance.

Governments can sometimes improve market outcomes. Here, one of the key practical points is that a blanket statement or attitude that government regulations are bad or that government regulations are good is unlikely to hold water. The devil is in the details. Enforcing property rights is fundamental to a free-market economy, and what it takes to enforce property rights can be viewed as a form of regulation. On the other hand, it is easy to find examples of bad regulations. One good way to identify bad regulations is to look for things that both (a) tell people they can’t do something they want to do, and therefore reduce freedom (most regulations do this) and (b) reduce the welfare of the vast majority of people. Many regulations are like this. They can exist because they benefit a small slice of people who influence the government to impose those regulations. (And it is easy to find regulations that look bad under any reasonable way of totaling up the benefit to that slice minus the cost to the vast majority of people. For example, the dollar benefits and costs may make the regulation look bad, and it may benefit relatively rich people at the expense of poorer people.)

A country’s standard of living depends on its ability to produce goods & services. Here the thing I want to add as a key concept for the students is that the ability to produce goods and services has increased dramatically in the last 200 to 250 years, and that technological progress continues now at a rate that rivals the rate at which it improved during the Industrial Revolution. Many voters are grumpy in part because the ability to produce goods and services is not improving as rapidly as it did in the immediate postwar era from 1947–1973 and in the brief period from 1995–2003, but it is still improving substantially each decade. The late Hans Rosling has a wonderful four-minute video, which I show my students in class, on the dramatic improvements in per capita income and health across the world in the last century.

Prices rise when the government prints too much money. My main perspective on this is that I want students to know that central banks are especially responsible for the unit-of-account function of money. If they do a bad job, money serves as a bad unit of account. I have a blog post giving my views on “The Costs of Inflation.” One small point: I always stress to students that the Fed doesn’t literally print money. What it does is create money electronically, as a higher number in an account on a computer. That higher number then gives the account holder the right to ask for more paper currency. That paper currency is printed by the Bureau of Engraving and Printing. Then each regional Federal Reserve Bank gets enough paper currency from the Bureau of Engraving and Printing to take care of any anticipated requests for paper currency by those whose “reserve accounts” or other accounts with the Fed give them the right to ask for paper currency.

Society faces a short-run tradeoff between inflation and unemployment. Under this heading, I would include basic concepts about aggregate demand: how much people, firms and the government want to spend. When prices or wages are sticky, people wanting to spend more leads to more getting produced. When more is being produced, unemployment goes down. In a theoretical world unlike the one we live in, in which prices and wages were perfectly flexible, people wanting to spend more would lead to higher prices, with no change in what gets produced. That theoretical world is relevant because it is probably a reasonable description of what happens in the long run. The transition from what happens in the short run to what happens in the long run means that higher aggregate demand (higher desired spending) will raise output in the short run and raise prices in the long run, relative to what they would have been otherwise. How fast prices rise is an area of debate.
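Three of the principles above lend themselves to toy models: thinking at the margin, prices as signals that bring a market into balance, and the short-run versus long-run effects of aggregate demand when prices are sticky. The sketch below is illustrative only. Every functional form, name and number in it is an assumption of mine; the price-adjustment rule is the textbook idea of Walrasian tâtonnement rather than a description of how real markets work; and the sticky-price model simply assumes aggregate demand is money divided by the price level, with prices moving gradually toward the level that puts output back at potential.

```python
"""Toy models of three principles. All functional forms and numbers are
illustrative assumptions, not estimates."""
from typing import Callable, List, Tuple


def best_quantity(benefit: Callable[[int], float], cost: Callable[[int], float],
                  max_q: int) -> int:
    """Thinking at the margin: keep adding one unit while the extra benefit
    covers the extra cost. Finds the optimum when marginal net benefit
    is decreasing (assumed)."""
    q = 0
    while q < max_q and benefit(q + 1) - benefit(q) >= cost(q + 1) - cost(q):
        q += 1
    return q


def clearing_price(demand: Callable[[float], float], supply: Callable[[float], float],
                   price: float = 1.0, step: float = 0.1, tol: float = 1e-9,
                   max_iter: int = 100_000) -> float:
    """Prices as signals: raise the price when demand exceeds supply and lower it
    when supply exceeds demand (tatonnement). Too large a step can overshoot."""
    for _ in range(max_iter):
        excess_demand = demand(price) - supply(price)
        if abs(excess_demand) < tol:
            return price
        price = max(0.0, price + step * excess_demand)
    raise RuntimeError("price adjustment did not converge")


def sticky_price_path(money: List[float], potential_output: float = 100.0,
                      initial_price: float = 1.0,
                      adjustment_speed: float = 0.25) -> List[Tuple[float, float]]:
    """Aggregate demand Y = M / P (velocity normalized to 1). Each period the price
    level moves part of the way toward the level at which Y equals potential output."""
    price = initial_price
    path = []
    for m in money:
        output = m / price
        path.append((output, price))
        # Output above potential pushes prices up next period; below potential, down.
        price *= (output / potential_output) ** adjustment_speed
    return path


if __name__ == "__main__":
    q = best_quantity(lambda q: 20 * q - q * q, lambda q: 4 * q, max_q=100)
    print("best quantity:", q)
    p = clearing_price(lambda p: 100 - 2 * p, lambda p: 3 * p)
    print(f"market-clearing price: {p:.4f}")
    # Money rises permanently by 10% starting in period 1.
    path = sticky_price_path([100.0] + [110.0] * 40)
    for t in (0, 1, 5, 40):
        output, price = path[t]
        print(f"period {t:>2}: output {output:7.2f}, price level {price:.4f}")
```

In the marginal example, net benefit 16q − q² peaks at q = 8, exactly where stepping forward one unit stops paying; in the market example, demand 100 − 2p equals supply 3p at p = 20. In the sticky-price model, a permanent 10% increase in money first raises output to 110 with the price level unchanged; over later periods output drifts back to 100 while the price level rises toward 1.1. The adjustment speed of 0.25 is arbitrary, which is one way of saying how fast prices rise is an open question.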

I am excited about measuring what students have learned in a department-wide way, and firmly of the view that what we should most hunger to measure is how much stays learned even years after students have taken a class. I am confident that the truth about how much students have learned in the long-term sense will be bracing and will lead to a more realistic sense of how many concepts can be taught and a greater interest in designing and implementing more engaging ways to help students learn the very most important concepts in economics.

I have two columns very closely related to this post:

You also might be interested in my move to the University of Colorado Boulder:

Increasing Returns to Duration in Fasting

I am in the middle of my annual anti-cancer fast. (See “My Annual Anti-Cancer Fast.”) That has led me to think about returns to scale—in this case, returns to duration—in fasting.

Cautions about Fasting.

Before I dive into the technical details, let me repeat some cautions about fasting. I am not going to get into any trouble for telling people to cut out added sugar from their diet, but there are some legitimate worries about fasting. Here are my cautions in “Don't Tar Fasting by those of Normal or High Weight with the Brush of Anorexia”:

If your body mass index (BMI) is below 18.5, quit fasting!

People should definitely not fast for more than 48 hours without first reading Jason Fung’s two books The Obesity Code (see “Obesity Is Always and Everywhere an Insulin Phenomenon” and “Five Books That Have Changed My Life”) and The Complete Guide to Fasting.

Those under 20, pregnant or seriously ill should indeed consult a doctor before trying to do any big amount of fasting.

Those on medication need to consult their doctor before doing much fasting. My personal nightmare as someone recommending fasting is that a reader who is already under the care of a doctor who is prescribing medicine might fail to consult their doctor about adjusting the dosage of that medicine in view of the fasting they are doing. Please, please, please, if you want to try fasting and are on medication, you must tell your doctor. That may involve the burden of educating your doctor about fasting. But it could save your life from a medication overdose.

Those who find fasting extremely difficult should not do lengthy fasts.

But, quoting again from “4 Propositions on Weight Loss”: “For healthy, nonpregnant, nonanorexic adults who find it relatively easy, fasting for up to 48 hours is not dangerous—as long as the dosage of any medication they are taking is adjusted for the fact that they are fasting.”

Let me add to these cautions: If you read The Complete Guide to Fasting you will learn that fasting more than two weeks (which I have never done and never intend to do) can lead to discomfort in readjusting to eating food when the fast is over. Also, for extended fasts, you need to take in some minerals/electrolytes. If not, you might get some muscle cramps. These are not that dangerous but are very unpleasant. What I do is simply take one SaltStick capsule each day.
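The first caution turns on body mass index, which is weight in kilograms divided by the square of height in meters (or, in U.S. units, 703 times weight in pounds divided by the square of height in inches). Here is a minimal sketch of that standard formula; the function names and the example weights and height are hypothetical, and the 18.5 cutoff is the one from the first caution.

```python
UNDERWEIGHT_CUTOFF = 18.5  # BMI below which the first caution says to quit fasting


def bmi_metric(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight in kilograms divided by height in meters squared."""
    return weight_kg / height_m ** 2


def bmi_imperial(weight_lb: float, height_in: float) -> float:
    """The same index from pounds and inches, using the standard conversion factor 703."""
    return 703 * weight_lb / height_in ** 2


def below_underweight_cutoff(bmi: float) -> bool:
    return bmi < UNDERWEIGHT_CUTOFF


if __name__ == "__main__":
    for weight_kg in (55.0, 70.0):
        value = bmi_metric(weight_kg, 1.75)  # hypothetical height of 1.75 m
        print(f"{weight_kg} kg at 1.75 m: BMI {value:.1f}, "
              f"below 18.5: {below_underweight_cutoff(value)}")
```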

Health Benefits of Fasting.

That said, appropriate fasting is a very powerful boost to health. See for example,

Let me be clear that I am talking about fasting by not eating food, but continuing to drink water (or tea or coffee without sugar). I haven’t come across any claim of a health benefit from not drinking water during fasting, as is urged for religious reasons in both Islamic and Mormon fasting. And there are many reasons to think that drinking a lot of water is good for health.

Fasting is also the central element in losing weight and keeping it off. I have often emphasized the importance of eating a low-insulin-index diet; the most important reason to eat a low-insulin-index diet is that it makes fasting easier. Indeed, a famous experiment during World War II involved feeding conscientious objectors a small number of calories overall in a couple of meals a day that were high on the insulin index. This caused enormous suffering. Don’t do this! In my experience, it is much easier to eat nothing than to eat a few high-insulin-index calories a day. The current top six posts in my bibliographic post “Miles Kimball on Diet and Health: A Reader's Guide” are clear about the importance of fasting:

Also, there is reason to think fasting can prevent cancer:

Increasing Returns to Duration in Fasting.

People differ in their tolerance for fasting. In “Forget Calorie Counting; It's the Insulin Index, Stupid” I talk in some detail about how to do a modified fast. Everything I say here about returns to duration applies to modified fasts as well as more complete fasts. The main difference is that a modified fast involves consuming some calories, while a complete fast involves consuming none. While calories in/calories out thinking is quite unhelpful to people making incremental changes to the typical American diet, when your insulin level is as low as it is during an extended fast or a modified fast, calories in/calories out thinking is a better guide. (See “Maintaining Weight Loss” and “How Low Insulin Opens a Way to Escape Dieting Hell.”)

The reason I think fasting should have increasing returns to duration is all about glycogen. Glycogen is an energy-storage molecule in your muscles and liver. It is the quick-in, quick-out energy storage molecule, while body fat is slow-in, slow-out energy storage. In the first day or two of fasting, much of the energy you need will be drawn from your glycogen stores. It is only as your glycogen stores are run down that the majority of the energy you need will be drawn from your body fat.

Moreover, based on my own experience, I theorize that when you end your fast and resume eating, you will have an enhanced appetite in order to replenish your glycogen stores. By contrast, I think of the amount of body fat as having a weaker effect on appetite. (Though likely weaker, it is an important effect. See “Kevin D. Hall and Juen Guo: Why it is so Hard to Lose Weight and so Hard to Keep it Off.”)

The bottom line of this view is that, for weight loss or maintenance of weight loss, the time it takes to deplete glycogen by fasting is like a fixed cost. Then, after the first couple of days, you should be able to burn something like .6 pounds of body fat per day while fasting. I got that figure from approximating the number of calories in a pound of body fat by 3600, and assuming a relatively low 2160 calories burned per day (2160/3600 = .6), because those who regularly fast get an efficient metabolism (which I think has good anti-cancer properties). This online article says that the average American woman and man, who likely have relatively inefficient metabolisms, burn respectively 2400 and 3100 calories per day.

The “fixed cost” of glycogen burning that substitutes at the beginning for body-fat burning may not literally happen all at the beginning. There is probably some fat burning early on, but every calorie drawn from glycogen is a calorie not drawn from body fat, so less fat is burned in the first couple of days than during later days. After a few days, glycogen reserves will be gone, so that all calories will have to come from fat burning. The theory I am propounding is that the glycogen reserves will bounce back fast when you resume eating, but that the body fat burned will be a relatively long-lasting effect.
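Here is a minimal Python sketch of the fixed-cost arithmetic. It uses the 3600-calories-per-pound and 2160-calories-per-day figures from the text; the size of the glycogen store (`GLYCOGEN_CALORIES`) is a hypothetical placeholder chosen only for illustration, not a figure from this post. Because the glycogen drawn down is a fixed amount no matter exactly when during the fast it is used, the average fat burned per day of fasting rises with the length of the fast.

```python
CALORIES_PER_POUND_FAT = 3600   # approximation used in the text
CALORIES_BURNED_PER_DAY = 2160  # efficient-metabolism assumption from the text
GLYCOGEN_CALORIES = 1800        # HYPOTHETICAL glycogen store, for illustration only


def fat_pounds_burned(days: float,
                      burn_per_day: float = CALORIES_BURNED_PER_DAY,
                      glycogen: float = GLYCOGEN_CALORIES) -> float:
    """Total pounds of body fat burned during a fast lasting `days` days.

    Glycogen is treated as a fixed cost: `glycogen` calories of the fast come
    from glycogen rather than fat, whenever during the fast they are drawn.
    """
    total_calories = days * burn_per_day
    fat_calories = max(0.0, total_calories - glycogen)
    return fat_calories / CALORIES_PER_POUND_FAT


if __name__ == "__main__":
    # Marginal rate once glycogen is used up: 2160 / 3600 = 0.6 lb per day.
    rate = CALORIES_BURNED_PER_DAY / CALORIES_PER_POUND_FAT
    print(f"steady-state rate: {rate:.2f} lb/day")
    for days in (1, 2, 3, 5, 7):
        total = fat_pounds_burned(days)
        print(f"{days} day(s): {total:.2f} lb total, {total / days:.2f} lb/day on average")
```

Passing `burn_per_day=2400` or `burn_per_day=3100` (the average-woman and average-man figures cited above) raises the steady-state rate to roughly 0.67 or 0.86 pounds per day, but under this model the fixed cost still makes short fasts less productive per day than long ones.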

When your body is primarily burning fat rather than glycogen, you are said to be in a state of “ketosis.” Those on “keto” diets try to get to fat burning faster by eating so few carbs and so much dietary fat that it is hard for their bodies to replenish glycogen stores—which replenish most easily from carbs. To me, keto diets are a little extreme on what you eat. I would rather eat a wider variety of foods and rely on fasting to get me into ketosis.

Let me say a little more about “keto.” First, many “keto” products are very useful products for those on a low-insulin-index diet. And in terms of explaining what you are doing, “keto” may communicate a reasonable approximation to people who don’t know the term “low-insulin-index diet.” Of course, there are people who don’t know “keto” either. For them, “lowcarb” will have to do, despite all of its inaccuracy. I have this pair of blog posts comparing a low-insulin-index diet (which is what I recommend to complement fasting), a keto diet and a lowcarb diet:

The bottom line of this post is that, for those who can tolerate either fasting or modified fasting, fasting is a magic bullet for weight loss. (If you want a wonkish discussion of this, see “Magic Bullets vs. Multifaceted Interventions for Economic Stimulus, Economic Development and Weight Loss.”)

For annotated links to other posts on diet and health, see:

John Locke: The People are the Judge of the Rulers

In the last four sections of the last chapter (“Of the Dissolution of Government”) of his 2d Treatise on Government: Of Civil Government, John Locke answers the question of “Who should be the judge of whether rulers have overstepped their bounds?” thus: “the body of the people.” John Locke gives an excellent reason why: rulers are the trustees or deputies of the people.

John Locke also poses and answers another hard question: What if a ruler refuses to be judged by the people? He says that if a ruler refuses to be judged by the people, then anyone who judges that a ruler has overstepped his or her bounds may consider themself to be at war with that ruler. I think John Locke would consider this provision not to apply in a democracy in which regular free elections can topple a ruler.

§. 240. Here, it is like, the common question will be made, Who shall be judge, whether the prince or legislative act contrary to their trust? This, perhaps ill-affected and factious men may spread amongst the people, when the prince only makes use of his due prerogative. To this I reply, The people shall be judge; for who shall be judge whether his trustee or deputy acts well, and according to the trust reposed in him, but he who deputes him, and must, by having deputed him, have still a power to discard him, when he fails in his trust? If this be reasonable in particular cases of private men, why should it be otherwise in that of the greatest moment, where the welfare of millions is concerned, and also where the evil, if not prevented, is greater, and the redress very difficult, dear, and dangerous?

§. 241. But farther, this question, (Who shall be judge?) cannot mean, that there is no judge at all: for where there is no judicature on earth, to decide controversies amongst men, God in heaven is judge. He alone, it is true, is judge of the right. But every man is judge for himself, as in all other cases, so in this whether another hath put himself into a state of war with him, and whether he should appeal to the Supreme Judge, as Jephtha did.

§. 242. If a controversy arise betwixt a prince and some of the people, in a matter where the law is silent, or doubtful, and the thing be of great consequence, I should think the proper umpire, in such a case, should be the body of the people: for in cases where the prince hath a trust reposed in him, and is dispensed from the common ordinary rules of the law; there, if any men find themselves aggrieved, and think the prince acts contrary to, or beyond that trust, who so proper to judge as the body of the people, (who, at first, lodged that trust in him) how far they meant it should extend? But if the prince, or whoever they be in the administration, decline that way of determination, the appeal then lies no where but to heaven; force between either persons, who have no known superior on earth, or which permits no appeal to a judge on earth, being properly a state of war, wherein the appeal lies only to heaven; and in that state the injured party must judge for himself, when he will think fit to make use of that appeal, and put himself upon it.

In the very last section of his 2d Treatise on Government: Of Civil Government John Locke declares himself a constitutionalist in the sense that once a form of government is in place that sets proper bounds on rulers, the constitutional forms already established must be followed, except “when by the miscarriages of those in authority it is forfeited.”

§. 243. To conclude, The power that every individual gave the society, when he entered into it, can never revert to the individuals again, as long as the society lasts, but will always remain in the community; because without this there can be no community, no commonwealth, which is contrary to the original agreement: so also when the society hath placed the legislative in any assembly of men, to continue in them and their successors, with direction and authority for providing such successors, the legislative can never revert to the people whilst that government lasts; because having provided a legislative with power to continue for ever, they have given up their political power to the legislative, and cannot resume it. But if they have set limits to the duration of their legislative, and made this supreme power in any person, or assembly, only temporary; or else, when by the miscarriages of those in authority it is forfeited; upon the forfeiture, or at the determination of the time set, it reverts to the society, and the people have a right to act as supreme, and continue the legislative in themselves; or erect a new form, or under the old form place it in new hands, as they think good.

Throughout his 2d Treatise on Government: Of Civil Government John Locke is insistent that rulers, too, are subject to the rule of law. And the rule of civil law is in turn subject to the preexisting law of nature.

For links to other John Locke posts, see these John Locke aggregator posts:

A New Advocate for Negative Interest Rate Policy: Donald Trump

I was surprised last week to see Donald Trump directly advocate negative interest rates. You can see his tweet below, along with my reaction.

Donald Trump also reacted to the European Central Bank’s cut in a key interest rate from negative .4% to negative .5% (along with other measures the ECB intends to be stimulative):

Here is my reaction, both to the ECB’s action and to Donald Trump’s tweet about it:

My note of approval for Donald’s tweet about the ECB’s action struck Ivan Werning as too much:

Ivan is one of the economists I most respect in all of the world, so I felt a bit distressed and offered an extended explanation and defense of what I had written, and Ivan added some more reactions:

Along the way, I had some other Twitter convos about Trump, negative rates and eurozone negative rate policy:

Here is some other description and commentary I gave of the ECB’s action:

But my favorite bit of Twitter commentary on the ECB’s action was not my own. It was from “Doo B. Doo”:

Noah Smith on Tanya McDowell Being Sentenced to Five Years in Prison for Sending Her Child to the Wrong Public School →

This was part of a plea deal when she faced other serious charges. But it is outlandish that it was even possible for prosecutors to put together a plea deal with a 5-year prison sentence for a homeless person sending a child to the wrong public school.

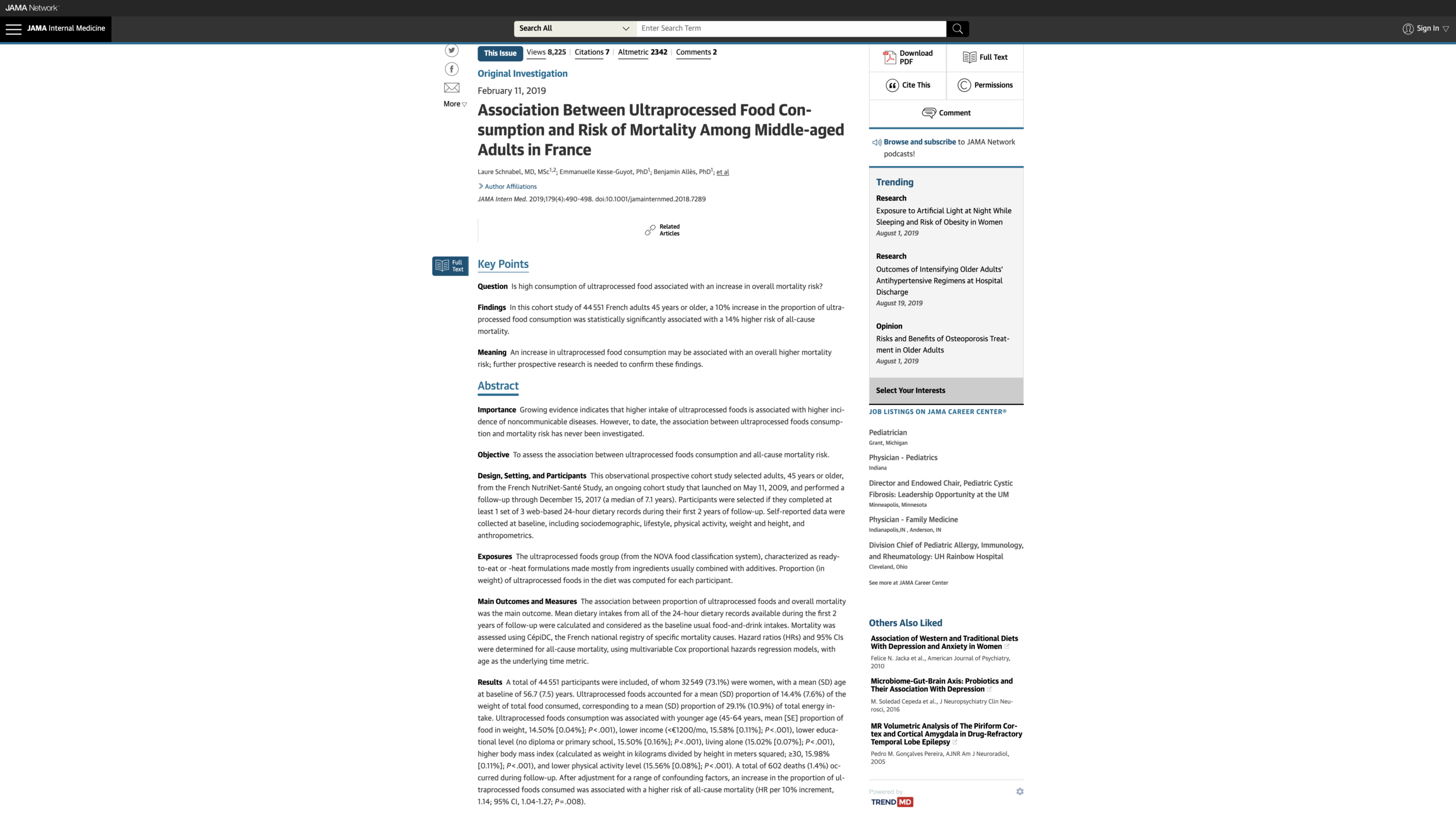

Eating Highly Processed Food is Correlated with Death

In “Hints for Healthy Eating from the Nurses’ Health Study” I write:

The trouble with observational studies of diet and health that don't include any intervention is the large number of omitted variables that are likely to be correlated with the variables that are directly studied. Still, it is worth knowing for which things one can say:

Either this is bad, or there is something else correlated with it that is bad.

When multivariate regression is used, one might be able to strengthen this to

Either this is bad, or there is something else bad correlated with it that is not completely predictable from the other variables in the regression.

In discussing “Association Between Ultraprocessed Food Consumption and Risk of Mortality Among Middle-aged Adults in France” by Laure Schnabel, Emmanuelle Kesse-Guyot and Benjamin Allès, I need to go further and elaborate on my interpretation of multivariate regression results that show a coefficient in the undesirable direction for “this”:

Either this is bad, or there is something else bad correlated with it that is not completely predictable from the other variables in the regression.

To make sure the message isn’t lost, let me say this more pointedly: In observational studies in epidemiology and the social sciences, variables that authors say have been “controlled for” are typically only partially controlled for. The reason is that almost all variables in epidemiological and social science data are measured with substantial error. Although things can be complicated in the multivariate context, typically variables that are measured with error get an estimated coefficient smaller than the underlying true relationship. (A higher coefficient multiplies the noise by a bigger number, and that bigger coefficient multiplying the noise is penalized by ordinary least squares, since ordinary least squares is looking for the best linear unbiased predictor, and noise multiplied by a big coefficient hinders prediction.) If the estimated coefficient on a variable meant to control for something is smaller than the true relationship with the true variable underneath the noise, then the variable is only partially controlled for. The only way to truly control for a variable is to do a careful measurement-error model. As a practical matter, anyone who doesn’t mention measurement error and how they are modeling measurement error is almost always not fully controlling for the variables they say they are controlling for.

I like to think of an observed variable as partially capturing the true underlying variable. When, because of measurement error, an observed variable only partially captures the true underlying variable, simply including that variable in multivariate regression will only partially control for the true underlying variable.
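A small simulation makes the attenuation point concrete. The minimal Python sketch below rests on assumptions chosen purely for illustration (standard normal variables, a true slope of 1, and measurement noise as large as the true signal); the function names are hypothetical. Under those assumptions the slope on the mismeasured variable comes out near one half of the true slope, which is the textbook errors-in-variables attenuation factor var(Z) / (var(Z) + var(noise)).

```python
import random


def cov(a, b):
    """Sample covariance of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)


def simulate(n=100_000, noise_sd=1.0, true_slope=1.0, seed=0):
    """Regress y on the true z and on a noisy measurement of z.

    Assumed model: z ~ N(0, 1); y = true_slope * z + N(0, 1);
    observed z = z + N(0, noise_sd**2).
    Returns (slope on true z, slope on observed z, reliability ratio).
    """
    rng = random.Random(seed)
    z = [rng.gauss(0, 1) for _ in range(n)]
    y = [true_slope * zi + rng.gauss(0, 1) for zi in z]
    z_obs = [zi + rng.gauss(0, noise_sd) for zi in z]
    slope_true = cov(z, y) / cov(z, z)          # OLS slope with intercept
    slope_obs = cov(z_obs, y) / cov(z_obs, z_obs)
    reliability = 1.0 / (1.0 + noise_sd ** 2)   # var(z) / var(observed z), var(z) = 1
    return slope_true, slope_obs, reliability


if __name__ == "__main__":
    s_true, s_obs, rel = simulate()
    print(f"slope on true z:     {s_true:.3f}")
    print(f"slope on observed z: {s_obs:.3f}  (theory: {rel:.3f} x true slope)")
```

Shrinking `noise_sd` toward zero moves the observed-variable slope back toward the true slope; the bigger the noise, the less of the true underlying variable the observed variable captures.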

If the coefficient of interest is knocked down substantially by partially controlling for a variable Z, it would be knocked down a lot more by fully controlling for Z. It is very common in epidemiology and social science papers to find statements like: “We are interested in the effect of X on Y. Controlling for Z knocks the coefficient on X (the coefficient of interest) down to 2/3 of the value it had without that control, but it is still statistically significantly different from zero.” This is quite worrisome for the qualitative conclusion of interest, because if measurement error biases the coefficient of Z down to only half of what the underlying relationship is, fully controlling for Z using a measurement-error model would be likely to reduce the coefficient of interest by about twice as much, and a coefficient on X that was 1/3 of the size without controls might well be something that could easily happen by chance—that is, not statistically significantly different from zero.
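The same logic can be checked by simulation. The minimal Python sketch below is built, under assumptions chosen purely for illustration, so that its numbers line up with the example in the text: X has a true effect of 1/3, a confounder Z drives both X and Y, and the observed version of Z is noisy enough that its estimated coefficient is biased down to half its true value. The function names and parameter values are hypothetical. Regressing Y on X alone gives a coefficient near 1; controlling for the noisy Z knocks it down to about 2/3; controlling for the true Z knocks it down twice as far, to about 1/3.

```python
import random


def cov(a, b):
    """Sample covariance of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)


def ols2(y, x1, x2):
    """OLS coefficients on x1 and x2 (intercept included) via the 2x2 normal equations."""
    s11, s22, s12 = cov(x1, x1), cov(x2, x2), cov(x1, x2)
    s1y, s2y = cov(x1, y), cov(x2, y)
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


def simulate(n=100_000, seed=1):
    rng = random.Random(seed)
    z = [rng.gauss(0, 1) for _ in range(n)]                # true confounder Z
    x = [zi + rng.gauss(0, 1) for zi in z]                 # X is correlated with Z
    y = [xi / 3 + 4 * zi / 3 + rng.gauss(0, 1)             # true effect of X is 1/3
         for xi, zi in zip(x, z)]
    z_obs = [zi + rng.gauss(0, 0.5 ** 0.5) for zi in z]    # Z measured with noise (variance 0.5)

    noisy_x, noisy_z = ols2(y, x, z_obs)                   # partial control
    full_x, full_z = ols2(y, x, z)                         # full control (true Z)
    return {
        "no_control": cov(x, y) / cov(x, x),
        "noisy_control_x": noisy_x,
        "noisy_control_z": noisy_z,   # about half the true coefficient of 4/3
        "full_control_x": full_x,
        "full_control_z": full_z,
    }


if __name__ == "__main__":
    for name, value in simulate().items():
        print(f"{name:>16}: {value:.3f}")
```

In real data the true Z is never observed, so the last regression is exactly what a measurement-error model has to approximate; simply including the noisy Z stops partway.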

Let’s turn now to the fact that eating highly processed food is positively correlated with mortality. Personally, my prior is that eating highly processed food does, in fact, increase mortality risk. So the statistical point I am making is questioning the strength of the statistical evidence from this French study for a proposition I believe. But ultimately, understanding the statistical tools we use will get us to the truth—and, I believe, through knowing the truth—to a better world.

One of the controls Laure Schnabel, Emmanuelle Kesse-Guyot and Benjamin Allès use is overall adherence to dietary recommendations by the French government (the Programme National Nutrition Santé Guidelines). The trouble is that the true relationship between eating highly processed food and eating badly in other ways is likely to be stronger than what can be shown by the imperfect data they have. That means that, at the end of the day, it is hard to tell whether the extra mortality is coming from the highly processed food or from other dimensions of bad eating that are correlated with a lot of highly processed food. Another set of controls are income and education. Even if the income and education variables measured euros earned last year and number of years of schooling perfectly, what is really likely to be related to people’s causally health-related behavior is probably something more like permanent income on the one hand, and knowledge of health principles on the other—which would depend a lot on dimensions of education such as college major and learning on the job in a profession as well as years of school. Hence, all the things that might stem from being poor in the sense of low permanent income (low income not just one particular year, but chronically) and having a low knowledge of health principles are undercontrolled for when they are represented only by typical income and education data. The same kind of argument can be made about controlling for exercise: for health purposes, there are no doubt higher- and lower-quality dimensions to exercise that are not fully captured by the exercise data in the French NutriNet-Santé Study that Laure, Emmanuelle and Benjamin are using. If exercise quality were better measured, controlling for more dimensions of exercise would likely knock the coefficient of ultraprocessed food consumption down a bit more.

The bottom line is that there is definitely something about what people who eat a lot of highly processed food do, or about the situations people who eat a lot of highly processed food are in that leads to death, but it is not clear that the highly processed food itself is doing the job. Highly processed food might have been merely driving the getaway car rather than firing the bullet that accomplished the hit job. Highly processed food is clearly hanging out with some bad actors if it didn’t fire the gun, but it is hard to convict it of committing the crime itself.

In the absence of clear-cut statistical evidence of causality, theory becomes important in helping to establish priors that will affect how one reads ambiguous data. I lay out theoretical reasons for being suspicious of processed food in “The Problem with Processed Food.” One of the problems with processed food is its typical reliance on sugar in some form to make processed food tasty. Laure Schnabel, Emmanuelle Kesse-Guyot and Benjamin Allès give other theoretical reasons to worry about processed food in this passage (from which, for the sake of readability, I have omitted the many citation numbers that pepper it):

First, studies have documented the carcinogenicity of exposure to neoformed contaminants found in foods that have undergone high-temperature processing. The European Food Safety Authority stated in 2015 that acrylamide was suspected to be carcinogenic and genotoxic, and the International Agency for Research on Cancer classified acrylamide as “probably carcinogenic to humans” (group 2A). Some studies reported a modest association between dietary acrylamide and renal or endometrial cancer risk. Further research is necessary to confirm these speculative hypotheses. Similarly, meat processing can produce carcinogens. The International Agency for Research on Cancer reported in 2015 that processed meat consumption was carcinogenic to humans (group 1), citing sufficient evidence for colorectal cancer. Moreover, the agency found a positive association between processed meat consumption and stomach cancer.

In addition, ultraprocessed foods are characterized by the frequent use of additives in their formulations, and some studies have raised concerns about the health consequences of food additives. For instance, titanium dioxide is widely used by the food industry. However, findings from experimental studies suggest that daily intake of titanium dioxide may be associated with an increased risk of chronic intestinal inflammation and carcinogenesis. Likewise, experimental studies have suggested that consumption of emulsifiers could alter the composition of the gut microbiota, therefore promoting low-grade inflammation in the intestine and enhancing cancer induction and metabolic syndrome. In addition, some findings suggest that artificial intense sweeteners could alter microbiota and be linked with the onset of type 2 diabetes and metabolic diseases, which are major causes of premature mortality.

Food packaging is also suspected to have endocrine-disrupting properties. During storage and transportation of food products, chemicals from food-contact articles can migrate into food, some of which might negatively affect health, such as bisphenol A. Epidemiologic data have suggested that endocrine disruptors are associated with an increased risk of endocrine cancers and metabolic diseases, such as diabetes and obesity.

After the passage of time has led to more deaths and therefore increased the statistical power available from the French data set, one way to test the importance of these forces will be to look at the association of the consumption of highly processed food with different causes of death. For example, it would be tantalizing evidence about causal channels if eating ultraprocessed food predicted a higher risk of death due to cancer by a bigger ratio than the ratio by which it predicted a higher risk of death due to cardiovascular disease.

What are highly processed foods—or in the term used by the authors, “ultraprocessed foods”? Laure, Emmanuelle and Benjamin write:

Each of the 3000 foods in the NutriNet-Santé Study composition table was classified according to the NOVA food classification system, which categorizes food products into 4 groups according to the nature, extent, and purpose of processing. This current study focused on 1 group classified as ultraprocessed foods, which are manufactured industrially from multiple ingredients that usually include additives used for technological and/or cosmetic purposes. Ultraprocessed foods are mostly consumed in the form of snacks, desserts, or ready-to-eat or -heat meals.

I have been struck by how cutting out sugar almost automatically cuts out the vast majority of highly processed foods. So it is not easy to tell apart harm from sugar and harm from highly processed foods. (I give tips for going off sugar in “Letting Go of Sugar.”) But it also means that currently it is not that important for one’s own personal efforts to avoid early death to distinguish between the harm from sugar and the harm from highly processed food. (In the future, it might become extremely important to distinguish between the two if a large number of people started avoiding sugar and food companies started reformulating their processed food to leave out sugar.)

My recommendation is to cut back on sugar and highly processed foods—efforts in which there are many opportunities to kill both birds—sugar and processed food—with one stone. For more detailed recommendations on good and bad foods, see:

Using the Glycemic Index as a Supplement to the Insulin Index

What Steven Gundry's Book 'The Plant Paradox' Adds to the Principles of a Low-Insulin-Index Diet

For annotated links to other posts on diet and health, see:

Chris Kimball: The Language of Doubt

A pond and stand of aspens near Chris’s home

I am pleased to have another guest post on religion from my brother Chris. (You can see other guest posts by Chris listed at the bottom of this post.) In what Chris has written below, he is wrestling not just with what he thinks and feels about Mormonism, but also with what he thinks and feels about Christianity and about belief in God itself.

The Skeptic

I am a skeptic, an empiricist, a Bayesian. These are not moral statements or value judgments. They are simply observations about how and whether I know anything. Essentially a matter of epistemology.

This is me, and a few other people I know. I’m sure many people think differently and that’s OK. I know there are discussions about the nature of the world, of human beings generally. Interesting discussion. Ones I’m not equipped to argue but interested to listen. This is not about the general case. Just about me.

A skeptic questions the possibility of certainty or knowledge about anything (even knowledge about knowing). An empiricist recognizes experience derived from the senses. A Bayesian views knowledge as constantly updating degrees of belief. In a functional sense, in the way it works in my life, I only know anything as a product of neurochemicals and hormones in the present.

In science, in law, in everyday life, being a skeptic is not a big deal; it is even usual or typical to talk like a skeptic. But in religion it is a big deal.

The Spirit: When the topic of doubt comes up, a common biblical reply is that the Spirit is the ultimate witness. “The Spirit beareth witness with our spirit” (Romans 8:16 KJV). I don’t suppose everybody has the same idea what that means; I hear it as a Platonic ideal of Truth or Knowledge, and a dualist body-soul where God or the Spirit speaks directly to the soul in some form of indisputable ultimate knowledge. “For to one is given by the Spirit the word of wisdom; to another the word of knowledge by the same Spirit.” 1 Corinthians 12:8

However this works for others, it doesn’t work for me. I don’t recognize any Spirit-to-soul ultimate knowledge communication. Only neurochemicals and hormones. I am reasonably satisfied there is an external world that impinges on my senses. I am willing to make room for an external supernatural world, although I am more likely categorized agnostic than believing. But the external world, natural or supernatural, ultimately registers through neurochemicals and hormones. There is no back channel, no source of certainty.

Testing Faith: Sometimes religious people talk about overcoming doubt with tested faith. “That the trial of your faith, being much more precious than of gold that perisheth, though it be tried with fire, might be found unto praise and honour and glory at the appearing of Jesus Christ.” 1 Peter 1:7. A Mormon version is the frequently referenced Alma 32:28, which compares the word to a seed, which if given place will begin to swell, “and when you feel these swelling motions, ye will begin to say within yourselves—It must needs be that this is a good seed, or that the word is good.” Plant the seed, watch it grow, come to “know” by proof of results.

I do in fact notice that giving place for a seed can lead to good feelings and positive experiences. However, cause and effect are mysterious to me, pattern searching is a real phenomenon, confirmation bias happens. In other words, I feel the swelling motions, but I never get to “must needs be.” The “must” is forever elusive.

Sensus Divinitatis: French Protestant reformer John Calvin used the term sensus divinitatis (“sense of divinity”) to describe a hypothetical human sense:

That there exists in the human mind and indeed by natural instinct, some “sensus divinitatis,” we hold to be beyond dispute, since God himself, to prevent any man from pretending ignorance, has endued all men with some idea of his Godhead … [T]his is not a doctrine which is first learned at school, but one as to which every man is, from the womb, his own master; one which nature herself allows no individual to forget. (John Calvin, Institutes of the Christian Religion, Vol I, Chapter III)

Not me. It has been suggested that this sense does not work properly in some humans due to sin. (Alvin Plantinga, Warranted Christian Belief). I don’t believe it, but that is certainly a theory I have heard in Mormon circles as well.

Gift of the Holy Ghost: The way Mormons often talk about the gift of the Holy Ghost sounds a lot like Calvin’s sensus divinitatis, including Plantinga’s theory that the sense may not work due to sin. From The Church of Jesus Christ of Latter-day Saints’ Gospel Principles, a reasonable indicator of how Mormons talk, even if not doctrine by some definitions:

The Holy Ghost usually communicates with us quietly. His influence is often referred to as a “still small voice” . . . The Holy Ghost speaks with a voice that you feel more than you hear . . . [T]he Holy Ghost will come to us only when we are faithful and desire help from this heavenly messenger. To be worthy to have the help of the Holy Ghost, we must seek earnestly to obey the commandments of God. We must keep our thoughts and actions pure. (Gospel Principles, Chapter 21: The Gift of the Holy Ghost, p. 123.)

I read the “thoughts and actions pure” worthiness requirement and recognize that saying “not me” can come across as a confession. But . . . not me.

In short, for me there is no back channel, there is no Spirit-to-soul communication, there is no Gift, that I recognize as anything more than neurochemicals and hormones. Everything I know or think I know is subject to the limitations and failings of mortality. I am not certain of my own memories, my perceptions, or my emotions. I recall “burning in the bosom” experiences; I have dreamed dreams; I have seen visions. Some of these experiences have changed my life. Some of these experiences feel fresh in memory because I tell stories about them. But where they came from and what they mean seem forever a matter of interpretation. I attach meaning in the present over the surface of uncertain memory of an inherently ambiguous experience. I don’t recognize a back door, a sysop or root, an access to indisputable ultimate knowledge. I am not certain, always and forever.

The Language of Doubt

For a skeptic like me, the language of “doubt” can mislead or be misconstrued. Doubt can be a simple synonym for skepticism. There is a sense in which I live in a state of doubt always and forever. Because it is too all-encompassing, “doubt” is not a useful concept for me. Therefore, I find it useful to expand the vocabulary and to use words like cynicism, belief, probability, and trust.

Cynicism: A friend once said “assume goodwill.” I’m sure it was not original with him, but it stuck as good advice, reinforced by the example of his good life.

The cynic does not assume goodwill. The cynic disbelieves the sincerity or goodness of human motives and actions. The cynic sees the natural man, the economic maximizer, the selfish gene, in human interaction. The cynic sees the church’s decisions and policies in terms of the collection plate or tithing receipts.

I know people who play the intellectual game of explaining everything in selfish self-centered terms. But I am convinced there is more--love and altruism and God and friendship and loyalty and long-term perspectives that extend beyond any one person’s lifetime. Therefore, I generally think I am not a cynic.

Probability and Belief: Belief sometimes sounds like a binary—you believe or you disbelieve. But it doesn’t have to be a binary. I experience life as a swarm of probabilities. It is not clear there is any propositional statement I could 100% agree or 100% disagree with.

I can adopt the language of belief and disbelief by taking high probability propositions and calling them belief, and low probability propositions and calling them disbelief. And there is substance here. It’s not all word play. It is very possible for my high probability propositions to correspond to your beliefs or certainties or knowledge. Living in a swarm of probabilities does not mean anything goes.

On the other hand, church people often use “I know” or “beyond a shadow of doubt” phrases and I struggle to fit in. Narrowly speaking, high probability is not the same as knowledge, and turning a highly probable proposition into an “I know” would feel like playing to the crowd—using the words the community expects. More broadly, living in a swarm of probabilities makes me constantly aware of uncertainty and I wonder whether there is anything in the nature of a statement about faith or belief about which I have enough confidence to consider the move to “I know.”

Trust: I find “trust” the most useful concept to structure my religious thinking and conversation. Trust feels like a principle of action. Is my confidence level sufficient to make choices or turn my life or make a commitment? That’s trust.

Instead of asking “do I believe in God?” or “does God exist?” the question becomes Ivan’s question (Ivan of Dostoevsky’s Brothers Karamazov): “How can I trust God if he allows the most unthinkable evils to destroy innocents like the little girl?” In formal terms, this is not a logical theodicy (is it rational, or is there an explanation that makes sense?). It is not exactly the evidential theodicy (does the weight of evidence, including the amount of evil in the world, argue for or against God?). It is more like an existential or pastoral theodicy that asks whether God makes sense in my life, in my circumstances, in light of my pain.

Instead of asking “do I believe in Christ?”—an existence proof kind of question--the question becomes one of confidence in an atonement. Is the child Yeshua born of Mariam someone I can trust in as a Savior? Is there a Christology (a theology regarding the person, nature, and role of Christ), a soteriology (a doctrine of salvation), that makes sense to me? That motivates me? That I am willing to trust in? Enough that I am willing to take up the cross and follow?

All this leads up to the questions that arise when I turn the lens of trust on the Church. As I think about trusting the Church, the catalog of standard truth claims does not strike me as very important. Instead, I think about questions like these: Do I trust the leaders? Or, which leaders do I trust? Do I trust the disciplinary system, the process by which some human representative judges my qualifications? Is the doctrine (the description of how God works, the Plan) a reliable representation of reality? Do I trust the history as taught in the standard curriculum? Do I trust the Church’s claim to effect salvation? Do I trust the process of extending callings or making assignments? Do I trust the Church as custodian of tithes and offerings?

For me, every one of these trust questions is thought-provoking, and none is quickly answered by reference to truth claims or “I know” kinds of belief statements. These trust questions lead me to a very nuanced relationship with God and Christ and the Church: neither all in nor all out, but forever tentative and questioning.

Don't miss these posts on Mormonism:

The Message of Mormonism for Atheists Who Want to Stay Atheists

How Conservative Mormon America Avoided the Fate of Conservative White America

The Mormon Church Decides to Treat Gay Marriage as Rebellion on a Par with Polygamy

David Holland on the Mormon Church During the February 3, 2008–January 2, 2018 Monson Administration

Also see the links in "Hal Boyd: The Ignorance of Mocking Mormonism."

Don’t miss these other guest posts by Chris:

Christian Kimball: Anger [1], Marriage [2], and the Mormon Church [3]

Christian Kimball on the Fallibility of Mormon Leaders and on Gay Marriage

In addition, Chris is my coauthor for

Don’t miss these Unitarian-Universalist sermons by Miles:

By self-identification, I left Mormonism for Unitarian Universalism in 2000, at the age of 40. I have had the good fortune to be a lay preacher in Unitarian Universalism. I have posted many of my Unitarian-Universalist sermons on this blog.

Noah Smith on Blocking Twitter Trolls →

I don’t do this myself, but I find Noah’s defense of what he does interesting. One of the few things I have written about “trolls” discusses them in a positive light:

Making the Monopsony Argument for Minimum Wages More Evidence-Based—José Azar, Emiliano Huet-Vaughn, Ioana Elena Marinescu, Bledi Taska and Till Von Wachter →

The monopsony argument for minimum wages is a sound one where it applies. It is not a good argument for a one-size-fits-all minimum wage like most minimum wage policies. And it points to a particular range of magnitudes for a minimum wage as helpful, not magnitudes outside that range. It is good to see some research aimed at making the monopsony argument more concrete and clarifying where it applies.

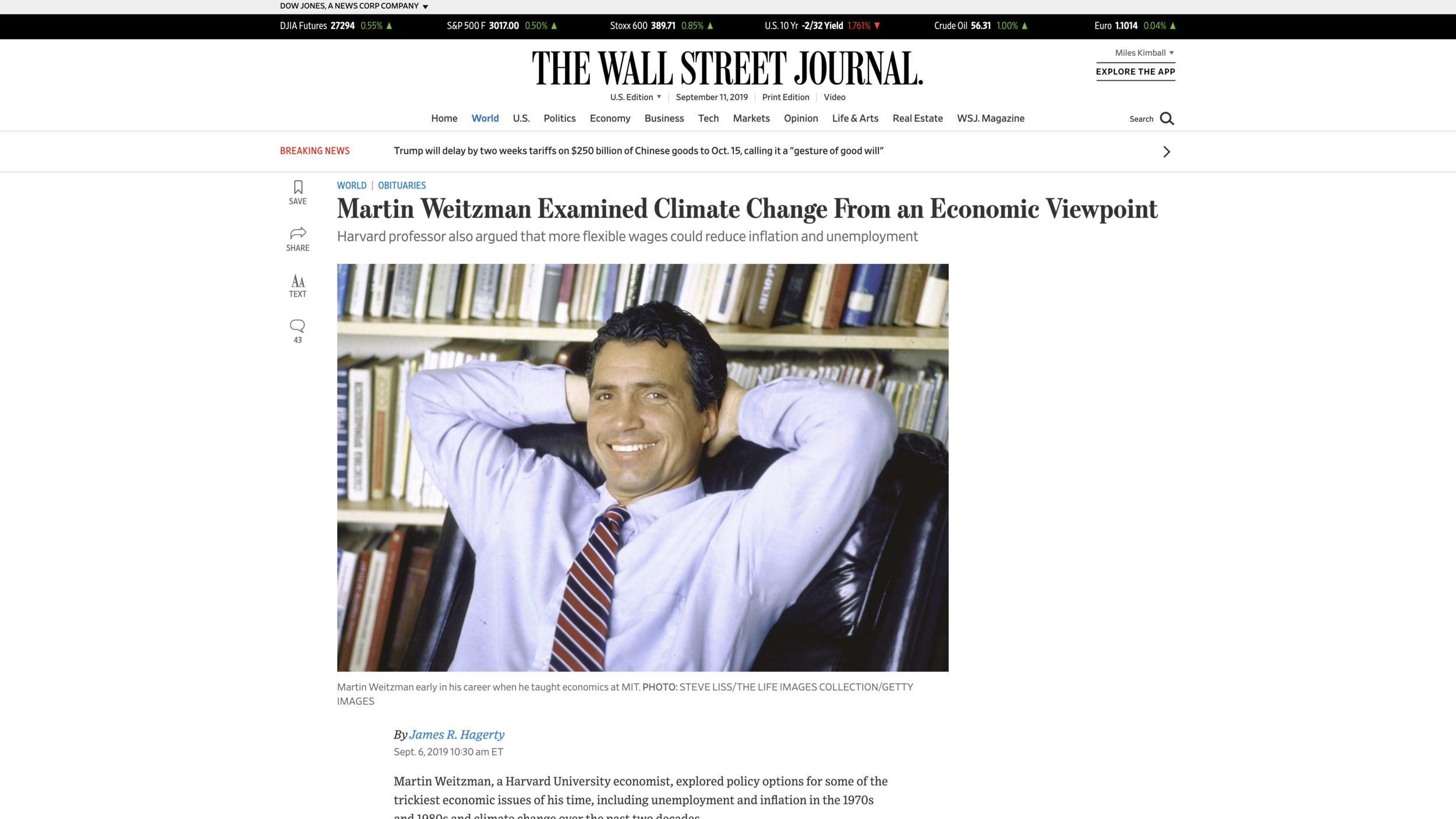

In Honor of Martin Weitzman

In “Why I am a Macroeconomist: Increasing Returns and Unemployment” I write:

… during the first few months of calendar 1985, I stumbled across Martin Weitzman’s paper “Increasing Returns and the Foundations of Unemployment Theory” in the Economics Department library. Marty’s paper made me decide to be a macroeconomist.

I am also a big fan of Martin’s book The Share Economy. It should be required reading for all macroeconomists. (I mention it in my posts “The Costs and Benefits of Repealing the Zero Lower Bound...and Then Lowering the Long-Run Inflation Target” and “The Equilibrium Paradox: Somebody Has to Do It.”)

I met Martin in person only when he came to the University of Michigan to give a seminar about how agnosticism about the true variance of asset prices could explain the equity premium puzzle. After a long argument over dinner, I came to think that he was applying Humean skepticism to asset pricing, but that what really mattered was what investors actually had in their minds, which is something we can in principle establish with survey measures of expectations. (Existing survey measures of expectations in the Health and Retirement Study actually show that most people think the mean return on the stock market is quite low. The particular survey questions don’t reveal much about people’s expectations of the extreme tails.)

Suffice it to say that Martin Weitzman has had a big influence on my professional life. So I am saddened that he, like Alan Krueger (see “In Honor of Alan Krueger”), is dead at his own hand. The Wall Street Journal article shown above says tersely:

Prof. Weitzman died Aug. 27 in what the Massachusetts medical examiner determined was a suicide. He was 77 years old.

That article says this about The Share Economy:

His 1984 book, “The Share Economy,” caught the attention of politicians searching for ways to prevent the economy from lurching from periods of rapid inflation to spells of high unemployment. He believed inflationary pressures would abate and companies would be less likely to lay off workers in bad times if wages could be adjusted down as well as up, depending on profits.

“The major macroeconomic problems of our day can be traced back ultimately to the wage system,” he said at a political forum in 1987. “We try to award every worker a predetermined piece of the income pie before it’s out of the oven, before the size of the pie is even known.”

On his work on climate change, I like Holman Jenkins’s discussion in “CNN Climate Show Wasn’t Just Boring,” which also appeared on September 6, 2019. Holman writes:

… the shocking suicide of Harvard climate economist Martin Weitzman, rightly praised in obituaries for an insight lacking in the CNN town hall: A climate disaster is far from guaranteed. It’s the low but not insignificant chance of a “fat tail” worst-case disaster that we should worry about. (Mr. Weitzman put the odds at 3% to 10%.)

It comes as Weitzman’s student, collaborator and co-author, Gernot Wagner, tellingly has focused his own attention lately on geoengineering rather than the seemingly lost cause of carbon reduction.

The logic here is that if things get really, really bad, so that the world is getting as hot as hell, then we should not, and will not, reject geoengineering out of hand. As Holman puts it:

So to answer CNN’s non-debate and the worries of the late Prof. Weitzman, if the small but not negligible chance of a climate catastrophe is borne out, we already know what the answer is going to be: to throw a bunch of particles into the atmosphere, at a cost of perhaps $2 billion a year, in order to block the estimated 1% of sunlight necessary to keep earth’s temperature in check.

Elizabeth Warren mentioned a policy for taming climate change that Martin championed: a carbon tax. Holman writes:

… Elizabeth Warren had an interesting moment when she admonished a network personality for trying to rile up viewers “around your light bulbs, around your straws and around your cheeseburgers.”

…

As the New York Times also noted, “For the first time, Ms. Warren explicitly embraced a carbon tax before quickly pivoting away . . .”

What’s Ms. Warren afraid of? A carbon tax would hardly be prohibitive. Weitzman advocated $40 a ton—the equivalent of 36 cents per gallon of gasoline. Such a tax could be implemented without raising the overall tax burden; it could be used to trim taxes on work, saving and investment, improving the economy overall. It could be embraced and copied by other nations out of self-interest rather than self-abnegation (unlike the absurd Green New Deal).
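As a quick sanity check on the 36-cent figure, here is the conversion worked out under two assumptions of my own, not stated in the quoted article: that the $40 is per metric ton (1,000 kg) of CO2, and that burning a gallon of gasoline emits roughly 8.887 kg of CO2, the figure the EPA commonly cites:

$$
\frac{\$40}{1000\ \text{kg CO}_2} \times 8.887\ \frac{\text{kg CO}_2}{\text{gallon}} \approx \$0.355\ \text{per gallon} \approx 36\ \text{cents per gallon}
$$

If the $40 were instead per short ton (about 907 kg), the same calculation would give roughly 39 cents per gallon, so the quoted 36 cents is consistent with a tax per metric ton.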

Short of a carbon tax or a limited supply of tradable carbon dioxide permits, the most honest and effective way to reduce the amount of carbon dioxide we put into the atmosphere right now would be to ban the burning of coal worldwide. Politically, demonizing coal is an achievable and laudable goal that would do a lot more good than the many mostly symbolic policy measures being taken now.

What connects Martin’s work on taming climate change and his work on taming the business cycle was a penchant for attacking hard problems. The article at the top of this post says this:

“I’m drawn to things that are conceptually unclear, where it’s not clear how you want to make your way through this maze,” he said at a 2018 Harvard seminar honoring his career.

We need trailblazers like Martin. It is sad that we now have one fewer on our troubled planet.