The Relative Citation Ratio

A key problem with citation counts is that they disadvantage fields or subfields that are either small or have a culture of short reference lists. I am intrigued by the solution offered by B. Ian Hutchins, Xin Yuan, James M. Anderson, and George M. Santangelo in their paper "Relative Citation Ratio (RCR): A New Metric That Uses Citation Rates to Measure Influence at the Article Level." The metric is defined at the article level. At a senior hiring, tenure, or promotion meeting, I would love to see the candidate's curriculum vitae annotated with the relative citation ratio for each paper.

The key idea behind the relative citation ratio is to define a target article's subfield by the article's cocitation network: the set of papers that appear alongside the target article in some paper's reference list. This seems a great way to define the target article's subfield flexibly, from the data, and in a way that can evolve over time.

One advantage of taking an article's cocitation network as the comparison group for a relative citation ratio is that for articles that get cited at all, the cocitation network quickly becomes quite large, so there isn't a small numbers problem.

Conceptually, the idea of a relative citation ratio is to compare citations per year (not counting the calendar year of publication) for the target paper to average citations per year for the articles in the cocitation network. To make the denominator of the ratio even more stable, for each comparison article in the cocitation network the authors substitute the average citations per year in that article's journal for the article's own citations per year.
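To make the arithmetic concrete, here is a minimal Python sketch of the ratio as described in the paragraph above. It is not the authors' code: the function names and inputs are hypothetical, the caller decides which citations to count, and any further normalization the paper applies is left out.

```python
from statistics import mean


def citations_per_year(citations, publication_year, last_year_counted):
    """Citations per year, not counting the calendar year of publication.

    Hypothetical helper: `citations` is whatever total the caller chooses
    to count, and `last_year_counted` is the final calendar year included.
    """
    years = last_year_counted - publication_year
    if years <= 0:
        raise ValueError("need at least one full year after publication")
    return citations / years


def simplified_relative_citation_ratio(article_rate, cocited_journal_rates):
    """Target article's citation rate divided by a field rate.

    The field rate here is the plain average of the journal citation rates
    (citations per year) of the articles in the cocitation network, one
    entry per co-cited article. This is an assumption-laden simplification.
    """
    field_rate = mean(cocited_journal_rates)
    return article_rate / field_rate


# Made-up numbers: a 2010 paper with 30 citations counted through 2015,
# co-cited with three articles from journals averaging 2, 4 and 3
# citations per article per year.
rate = citations_per_year(30, 2010, 2015)
ratio = simplified_relative_citation_ratio(rate, [2.0, 4.0, 3.0])
```

A ratio above 1 in this sketch means the target paper is cited more often per year than the journal-based average for its cocitation network.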

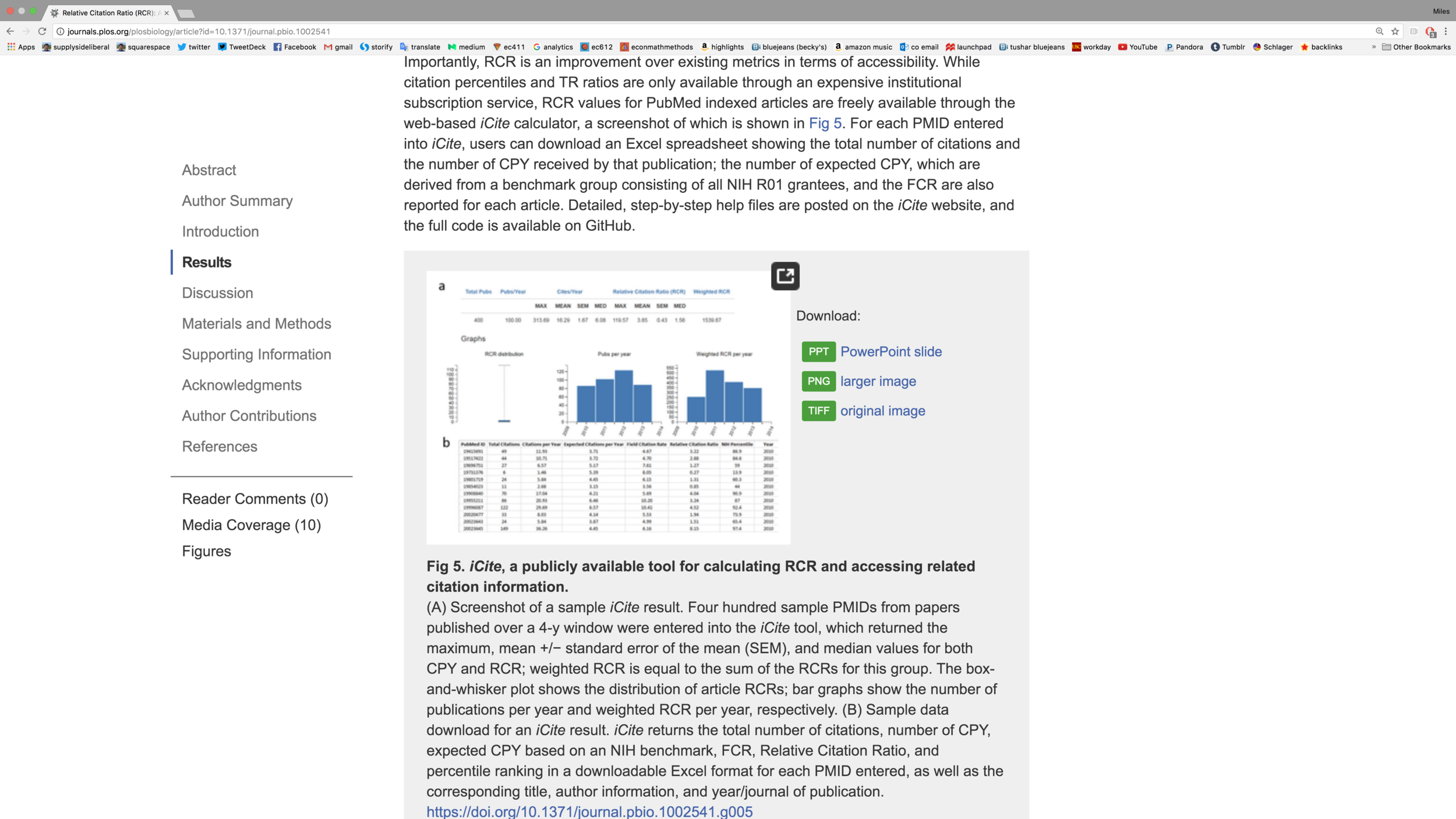

The authors B. Ian Hutchins, Xin Yuan, James M. Anderson and George M. Santangelo provide code for the calculation of the relative citation ratio, as well as the values of the relative citation ratio for every article in PubMed (where the National Institutes of Health requires all articles it supports to be posted). The image below illustrates their iCite tool for the relative citation ratio.

In addition to the intuitive appeal of the relative citation ratio, B. Ian Hutchins, Xin Yuan, James M. Anderson and George M. Santangelo report the results of several analyses that show the relative citation ratio is doing what one would hope it would do.

In addition to annotating CVs with relative citation ratios for each publication, I would like to see measures of a scholar's overall productivity based on the relative citation ratio. Rankings built on such measures can be used in various ways. For example, just as I pay attention to the pageviews on my blog, I also watch the evolution of my author rank by different measures on REPEC. And I occasionally cite the REPEC data for a relatively impartial measure of someone's scholarly prominence, as I did explicitly for Jeremy Stein in "Meet the Fed's New Intellectual Powerhouse" and implicitly for Andrei Shleifer in "Adam Smith as Patron Saint of Supply-Side Liberalism?"

I would love to see REPEC add measures of productivity based on the relative citation ratio to the many measures they have now. Four candidates:

1. The sum of relative citation ratios for all of a scholar's publications

2. The sum of (relative citation ratio/number of authors) for all of a scholar's publications

3. The sum of relative citation ratios for all of a scholar's publications in a five-year window, say those published in calendar years t-6 through t-2, where t is the current year.

4. The sum of (relative citation ratio/number of authors) for all of a scholar's publications in a five-year window, say those published in calendar years t-6 through t-2, where t is the current year.
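The four measures listed above could be computed along these lines. This is only a sketch: the `Paper` record and function names are hypothetical, and t is read as the year in which the measure is computed, which is an assumption about how the window is meant to work.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    """Hypothetical record for one publication."""
    year: int        # calendar year of publication
    rcr: float       # relative citation ratio of the paper
    n_authors: int   # number of authors


def total_rcr(papers):
    """Sum of relative citation ratios."""
    return sum(p.rcr for p in papers)


def author_weighted_rcr(papers):
    """Sum of (relative citation ratio / number of authors)."""
    return sum(p.rcr / p.n_authors for p in papers)


def in_window(papers, t):
    """Papers published in calendar years t-6 through t-2 (five years).

    Assumption: t is the year in which the measure is computed.
    """
    return [p for p in papers if t - 6 <= p.year <= t - 2]


# Made-up publication record.
papers = [Paper(2010, 2.0, 2), Paper(2013, 1.0, 1), Paper(2016, 4.0, 4)]

career_total = total_rcr(papers)
career_weighted = author_weighted_rcr(papers)
window_total = total_rcr(in_window(papers, 2017))
window_weighted = author_weighted_rcr(in_window(papers, 2017))
```

Dividing by the number of authors splits credit equally among coauthors, which is the simplest weighting consistent with the list; other splits are possible.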

Measures 3 and 4 are related to the tough goal I hope tenured professors (who aren't working towards an even more important objective) strive for: to have a tenurable record for each five-year window after getting tenure as well as for the five-year (or sometimes longer) window that actually won them tenure.

I honestly don't know how I personally would fare by these measures. So this wish is driven by my curiosity, not by thinking it will necessarily make me look better. I tend to think I personally write in subfields with relatively high rates of citation and so would probably look a little worse by these measures than by some of the other citation-based measures. But maybe there are other subfields that have even higher rates of citation, so correcting for subfield could make me look relatively better.

There are limitations to any citation-based measure of scholarly productivity. But to the extent we end up looking at citation-based measures of scholarly productivity, those of us who love data can't help but want to stare at the best possible citation-based measures.